A Complete Mechanical Solution to the Hard Problems of Consciousness, Part 4

What is it like to be a basketball game?

.14 Redness, Explained

We can now explain the Qualia Problems.

Ultimately, we’re trying to explain why activity in your brain has a feeling—a private texture, flavor, or quality that you and only you immersively experience. In this article, we’ll learn why stuff moving around your head is experienced as redness.

We are now entering the challenging but revelatory heart of solving the hard problems. This article may take more than one pass to digest. I’ve tried to make it as plain and lucid as possible, but if the hard problem were easy to explain, then less mathematical folks would’ve cracked it by now. This article took far longer for me to write than I expected, though this is the first time a complete mechanical solution has been written out for this ancient enigma, so I’m learning how to tell the story as I go.

The Hard Problem! Let’s get cracking.

When most folks contemplate the hard problems of consciousness and try to imagine “activity” generating experience, they often get stuck thinking about a single activity, like an electric jolt fired from a neuron. They try to imagine this jolt being conscious.1

A jolt cannot be conscious. Trying to imagine a neural jolt being conscious is an impossible act. Much like trying to imagine how the sun orbits the Earth, or how there can be an invisible force that leaps out of objects to grab other objects.

There is no force: gravity is caused by the bendiness of spacetime.

There is no geocentrism: the Earth orbits the sun.

And there is not a simple activity that generates conscious experience—there is an inconceivably VAST and COMPLICATED tangle of activity that generates experience. Consciousness arises from the tangle, not the jolt.

You really can’t imagine just how horrifically complicated all our brain activity truly is. When I hear folks magisterially sweep away the complicated engineering structure of consciousness and insist, forget all that fancy math and architecture—just explain to me how activity like whistling can be conscious!

I feel like saying, You don’t have any concept of how absurdly and dismayingly complex the activity of the human mind truly is!!

The heart of this article is showing why the activity embodying redness feels like redness. But holy macaroni—vision is tough sledding. The amount and variety of brain activity involved to generate conscious sight is preposterously complicated. Grossberg figured most of it out, but there’s not even a good way to summarize it at a high level. Maybe this:

There’s a visual reaching system and a visual recognition system in your brain and both systems must work closely together to get their own jobs done.

What I’m going to do is pick THREE specific forms of brain activity that jointly embody your experience of redness and show how these three mechanical activities feel to a mindless mechanical experiencer.

This account of the activity embodying redness is a simplification, as there are many other brain activities involved with redness besides the three I will share. But these articles do not aim to provide a complete account of vision, nor even a complete account of redness. We’re trying to solve Hard Problem Prime! We’re striving to explain why activity in your brain forms your conscious experience. To that end, we don’t need a complete account of redness, but a complete account of why redness feels red to a mechanical experiencer.

Solving the hard problems unearths a profound truth about consciousness and souls: Conscious experience is mechanical.

This might sound bizarre, impossible perhaps, but as we will see, the physical activity that embodies conscious experience establishes a mechanical analog to every subjective experience. Usually, an embodied thing in your brain that corresponds to a thing out in the world. We’ll need to come up with a good term for this mind-roiling insight—for now, let’s go with “The Mind-Body Mechanical Correspondence Conjecture”:

For every human experience of an object, a physical object forms in the brain that mechanically corresponds in one-to-one fashion with the qualia of the object. Every subjectively experienced feature of the object corresponds to a mechanical feature forged of brain activity.

In this article, we’ll learn how the first-person experience of an activity gets determined by another activity. We’ll learn the mechanical source and mechanical expression of any experience.

We’ll see why resonant activity in the Visual WHERE-is-it module feels like redness to other brain activity. We’ll learn where the subjective red “ink” in redness comes from. We’ll find out why redness activity feels so different than loudness activity—and we’ll also learn why all conscious activity generates a special feeling of potential and possibility—a feeling of awareness.

The goal of this article is to demonstrate why activity feels like something and why redness feels red. Big advances. Yet, at article’s end, there will remain one final unidentified ingredient for the qualia recipe—the most important of all.

It’s one thing to demonstrate how activity feels like redness, as we do in this article. It’s quite another thing to demonstrate how activity in YOUR brain feels like YOUR redness.

The next article will be one you won’t soon forget, pilgrim. It is a rare thing to get exposed to a truly revolutionary reconfiguration of reality.

In the next article, you will learn why all the resonant activity in your brain—redness, loudness, saltiness, stinkiness, speed, warmth, hope, rejuvenation—feels like you. We’ll finally learn the cosmic mechanism that stitches together all the mechanical experiences of all the activity whirling round and round your brain into a single seamless conscious experience inaugurating your soul.

But first, redness. And basketball.

.15 Android Basketball

Let’s visit a basketball game in progress in Madison Square Garden. Two teams are playing: Orange and Green. Orange is the home team, so most spectators are Orange fans, but there are some Green fans in the Garden, too.

We immediately realize there is near-total agreement among third-person spectators regarding the meaning of game activity. The fans all CHEER together in response to certain observed activities, while GROANING in response to other activities. The ten-thousand spectators hold many different perspectives on the game—a full 360 degrees of camera angles circling the court from varying heights—and yet, when an Orange player performs JUMP SHOT ACTIVITY there is overwhelming real-time consensus the activity FEELS GOOD, expressed through arena-shaking roars!

It’s worth observing that while the hard problems of consciousness are befuddling to humans, it’s apparently not too difficult for highly diverse observers of different ages, genders, ethnicities, incomes, and perspectives to all react to infinitely variable activity (basketball activity) in the exact same way.

But let’s not smuggle in any unseemly human feels. We’re trying to explain where experience comes from! No cheating! Let’s make all ten players and all ten thousand spectators robots. Now there’s no “qualia” going on. No warm, gooey experience anywhere! Just bits and bytes whirring through digital CPUs.

How does a robot spectator express its experience of game activity?

Mechanically. Through activity. The robot’s activity. A robot lets us know how it feels about an activity it observes by performing another activity in response.

If a robot third-person spectator evaluates game activity as “positive” it CHEERS by buzzing loudly and moving up and down. If the robot evaluates activity as “negative” it GROANS by hissing air and swinging side to side.

Thus, robots express their reaction to activity through activity.

How does a robot evaluate the meaning of game activity? That is, how does a robot decide what activity to perform in response to experienced action?

One way would be to hard-wire the robot to automatically respond to specific activity in a specific way. You could program the robot to cheer each time it observes a jump shot, for instance. But this is not a path to consciousness.

There’s an infinite variety of potential activities on a basketball court, including rare activities one may not think to pre-program into the robot. What if a player bounces the ball off another player’s head before it goes into the basket?

There’s a more natural mechanical means of determining one’s response to an experience of game activity—even when you’ve no idea in advance what manner of activity you might encounter.

Through purpose.

The key question turns out to be:

Whose purpose?

The game activity’s purpose? No. The experiencer’s purpose.

.16 The First-Person Experience of a Target Activity is Determined by the Experiencing Activity’s Purpose

The purpose of the experiencing activity determines the first-person mechanical experience of the target activity. Does the target activity help or hinder the experiencing activity’s purpose?

Consider a jump shot.

A jump shot is well-defined offensive basketball activity that can lead to points. But does a jump shot feel GOOD or BAD?

It depends on the observer. The jump shot’s meaning is evaluated by an observer relative to the observer’s purpose.

In Madison Square Garden, robot spectators embrace two divergent purposes. The goal of the Orange-fan robots: to watch Orange team WIN!

Green-fan robots are watching the exact same game, but for them the game activity serves a different purpose: a Green team VICTORY!

All we need to program into the robots instead are instructions to evaluate whether observed game activity promotes or hinders the robot’s purpose. If an observed game activity helps its team win, the robot CHEERS! If it hinders victory, the robot GROANS! Now any experienced activity can generate an effective response.
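Here is a minimal sketch of that idea in Python, offered as an illustration rather than a real robot design. The names `GameEvent`, `react`, and the neutral `"IDLE"` response are all hypothetical, and scoring the "purpose" as a simple points difference is an assumption made to keep the example small. Notice there is no lookup table of plays: the robot only asks whether the observed activity helped or hindered its own team.

```python
from dataclasses import dataclass


@dataclass
class GameEvent:
    description: str
    points_for: dict  # team name -> points gained by that team on this play


def react(fan_of: str, event: GameEvent) -> str:
    """Return the response activity of a robot whose purpose is a win for `fan_of`."""
    # Effect on the robot's purpose: its team's gain minus every rival's gain.
    own = event.points_for.get(fan_of, 0)
    rivals = sum(p for team, p in event.points_for.items() if team != fan_of)
    effect = own - rivals
    if effect > 0:
        return "CHEER"
    if effect < 0:
        return "GROAN"
    return "IDLE"  # assumed neutral response; the article only names CHEER and GROAN


# A play nobody would think to pre-program still gets a sensible response.
shot = GameEvent("Orange shot bounces in off a defender's head", {"Orange": 2})
print(react("Orange", shot))  # CHEER
print(react("Green", shot))   # GROAN
```

The same event produces opposite responses because the only thing that differs between the two robots is the purpose they evaluate it against.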

Look! An Orange player is performing a JUMP SHOT. The player shoots the ball and it goes through the hoop! The Orange-fan robot spectators erupt with CHEERS! They experience the jump shot activity as POSITIVE. Madison Square Garden rumbles with sound!

At the same time, the Green-fan robot spectators GROAN. They experience the Orange jump shot as NEGATIVE. Their energetic hisses are mostly drowned out by the Orange-fan cheers. (None of the robots have inner feelings of positive or negative; rather, they generate distinctive activities—CHEERS and GROANS—which physically embody their divergent experiences of the jump shot.)

This shows that the same target activity can generate different experiences depending on the purpose of the experiencing activity, and these divergent experiences are expressed mechanically. Orange and Green fans agree a jump shot is a jump shot, but Orange-fan robots respond with CHEERING ACTIVITY, while Green-fan robots respond with GROANING ACTIVITY.

Before we join the game activity, notice one thing.

The spectators watching the game—even robot spectators—act like AMPLIFIERS of the EXPERIENCE of observed activity. When the entire crowd erupts with cheering, it makes clear to all observers the feeling of game activity. Even if some spectators happened to be looking at their phone during the play, even if a player’s back was to the jump shot, EVERYONE now knows positive game activity happened from the perspective of Orange-fans, because the building is rocking.

.17 First-Person Jump Shots and Blocks

Now let’s move into the game—and your brain. Let’s move from third-person perspective on game activity to first-person perspective on game activity.

How?

By becoming a player in the game. A robot player who plays basketball exactly like a human, but without any experience or feelings happening in its circuitboards. It looks and smells like Steph Curry, and moves like Curry, and makes jump shots like Curry—but inside is only gears and wires.2

There’s one enormous difference between the perspectives of spectators and players on game activity. The fans mostly react to the outcome of game activity. (The jump shot went in: I WILL CHEER!)

But for a player—THE PLAYER IS THE GAME. The player’s activity is the game activity.

Every player movement, every instant, matters. A player adjusts his own activity moment-to-moment in response to other game activity.

This can be seen most clearly if we consider the interaction of two players, an offensive Orange player and defensive Green player. Orange is performing a JUMP SHOT. The purpose of this activity is to THROW THE BALL THROUGH THE HOOP.

Green, however, is performing a BLOCK. The purpose of block activity is to PREVENT THE SHOOTER FROM THROWING THE BALL THROUGH THE HOOP.

Both players are concentrating intensely on every bit of activity of the other player, every instant, to decide what to do next. The shooter tries a pump fake. The blocker isn’t fooled, and sticks with the shooter.

Notice:

The shooting activity responded to the blocking activity (by pump faking) while the blocking activity responded to the shooting activity (by not jumping). EACH ACTIVITY IS RESPONDING TO THE OTHER ACTIVITY IN REAL-TIME.

Now the shooter pump fakes again and catches the defender, who jumps into the air in an attempt to block a shot that doesn’t come. The shooter waits until the defender is falling to the floor, then finally jumps high into the air and releases the ball over the head of the falling defender. Again, at every moment, the shooting activity is responding to the blocking activity, and the blocking activity is responding to the shooting activity.

The blocking activity’s first-person experience of jump shot activity is given mechanically by the physical trajectory of the blocking activity. The precise movement of the defender as he attempts to block the movement of the shooter IS the mechanical experience of the jump shot, as experienced from the first-person perspective of the defender.

Every position of the blocker’s physical trajectory through space is wholly determined by the blocker’s experience of the shooter. This is true even if the defender is a robot, without gooey inner feelings. The mechanical experience of the robot blocker—the physical incarnation of its first-person perspective on shooting activity—is given by its trajectory.

At the same time, the jump shot activity’s first-person experience of blocking activity is given mechanically by the physical trajectory of the shooting activity, which is reacting at every moment to the blocking activity. The precise physical movement of the shooter as he attempts to score over the blocking movements of the defender IS the mechanical experience of the block, as experienced from the first-person perspective of the shooter.

Thus, purposeful activity creates physical meaning out of its interactions with target activity through its own first-person experience of the target activity.3 Two activities interacting with one another in real-time provide complementary first-person perspectives on the activities, each feeling different to the other.

.18 The Purpose of Color

Now, the qualia of redness.

This requires learning a little about the role of color in the brain. Only a little: the full brain activity involved with color processing is formidably intricate and non-intuitive to someone trained in the physical sciences, and impenetrable to someone untrained in science.

We must always keep in mind that our perception of color—like all brain-mediated perceptions—fulfills explicit mental goals. Goals shaped over eons of gritty evolution. The purpose of your experience of color is not to fill your heart with rainbow joy.

The role of color within your consciousness cartel is twofold: to promote effective object-reaching and effective object recognition.

Color is used by the Visual WHERE-is-it module to identify and emphasize reachable objects. Color is used by the Visual WHAT-is-it module to recognize objects.

Grossberg discovered a mental tradeoff between the brain’s ability to use color for reaching and recognizing. Getting better at using color for reaching makes it harder to use color for recognizing, and vice-versa. As it turns out, color is more useful for reaching than for recognizing. How do we know?

Because evolution resolved the reaching-recognition tradeoff by favoring brain dynamics that use color for reaching.4

We learned that activity is experienced mechanically relative to the purpose of the experiencer, even basketball activity. At every moment of its unfolding, blocking activity physically experiences shooting activity. A block’s first-person experience of a jump shot is given by the mechanical trajectory of the block.

So what brain activity enjoys first-person experience of the redness activity resonating in the Visual Where module? Who feels the redness?

REACHING activity.

Reaching activity, which unfolds in the HOW-do-I-reach-it module, physically reacts moment-by-moment to the object-highlighting activity of the Visual Where module, including its redness activity, the way a blocker responds to a shooter.

The How module’s purpose is reaching the target. Guiding your hand to an object in your environment. To achieve its purpose, the How module makes use of the activity of the Visual Where module.

Look! You see a red apple sitting on a table. You want to grab the apple. As your How module performs reaching activity by moving your hand toward the apple, the trajectory of your hand depends every instant on the activity of the Visual Where module.

The mechanical trajectory of your hand as it reaches for the apple embodies a first-person experience of the activity in your Visual Where module even though the Where module isn’t moving your hand. This is exactly physically analogous to a blocker’s experience of a jump shot. In fact, it’s not merely analogous—one mechanical component of a human defender’s conscious experience of a jump shot is the defender’s How module moving the defender’s hand toward the blocking activity’s target object: the basketball in the shooter’s hand.

The mechanical expression of how Where module activity feels is given by the trajectory of your hand.

If you ask a defender what it felt like trying to block a shot—what her first-person perspective on shooting activity felt like—the defender might say, “She pump-faked and I didn’t bite and stayed with her and she pump-faked again and I was fooled and jumped and raised my hands to block her but she waited until I was falling and then she jumped over me.” That’s what jump shot activity felt like moment by moment from first-person perspective.

If you could ask the How module what its first-person experience of Visual Where module activity felt like (when the How module was reaching for a red apple, for instance), it might say, “There was an intense spatial patch of stable activity surrounded by weaker activity and the intense patch was my target and I kept comparing the attention-getting central activity to my hand position until I grabbed the target activity in my hand and the activity shut off.”

Of course, the How module doesn’t have eyes. It doesn’t see anything. The How module is activity with a purpose. Its purpose being to move your hand to grab the apple. The only “guidance” the How module experiences to know what to aim for is the activity of the Where module.5

Now let’s move inside your Visual Where module and examine mechanical activity generating the first-person experience of redness.

.19 Three Activities of Redness

The purpose of the Visual Where module is to identify and highlight the location of reachable objects in your environment. Redness exists to help the Where module fulfill its purpose.

There are a great many subtle and nuanced activities involved with producing your experience of redness as you reach for a red apple. Red-frequency light directly sensed by your retina is split apart, recombined, rescaled, rebalanced, filled-in, contrast-enhanced, and brightness-enhanced (or brightness-diminished) by brain activity. Even though we’ll use a stripped-down version of the brain’s color activity in this article, it is still extremely laborious and delicate to get at mental redness, because of the outrageous complexity of the human color system6.

In no sense whatsoever does your brain “take the redness” from the environment and show it to you as directly and realistically as possible. Instead, consciousness is designed to structure your perception of reality into a user-friendly format that makes it as effortless as possible for you to perform vital tasks—like reaching for objects.

Your Visual WHERE-is-it module is designed to create an enhanced and annotated version of the visual scene that facilitates the targeting of reachable objects.

Consciousness is the most user-friendly interface ever built. (Who uses this interface onto physical reality? You! We’ll see how this works in the next article.) Evolution spent a half-billion years of module mind evolution making your consciousness interface intuitive, error-tolerant, minimalist, and hyper-efficient.

Now we get to the nitty-gritty of the Qualia Problems. We are going to look at ACTIVITY IN THE BRAIN embodying the REDNESS of a reachable red apple. We will see how other brain activity interacts with the redness activity and mechanically experiences the redness activity from a first-person perspective.

To crack the Qualia Problems, we’re going to examine three redness-embodying activities that resonate in your Visual Where module.

Color Activity

Surface Activity

Distance Activity

And remember—the chief experiencer of these redness activities is your reaching activity. Your reaching activity unfolds in real-time in direct mechanical response to these three forms of redness activity.

The bottom-up REALITY in Visual Where resonance includes surface activity and color activity. The top-down EXPECTATION is made of distance activity.

The Visual Where module’s surface activity structures the visual scene into a Figure and Ground. The Figure is a reachable surface—a red apple, in this case. The Ground is unreachable background.

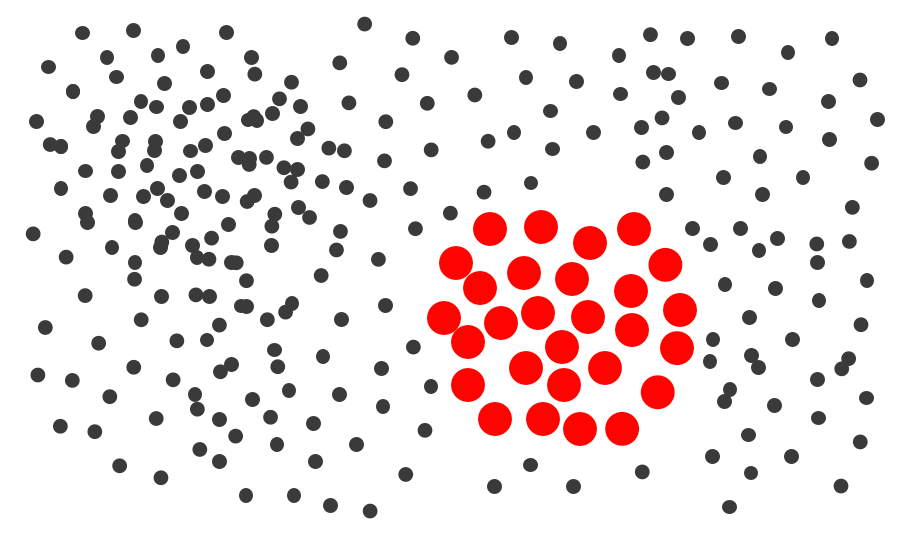

The surface of the apple (the Figure) is embodied in a physical map of spatial neural activity. A literal map: a pattern of neural activity forms in your brain, stretched out over a contiguous array of neurons, that embodies the perceived geometry of the apple. Neurons are firing in a roundish physical region of your brain corresponding to the round surface of the Figure object (the apple). In particular, the activity in this apple-shaped region is STRONGER than the activity in the surrounding region (embodying the Ground). This surface activity forms part of the bottom-up REALITY for the Where module resonance.

This apple-shaped array of activity is provided in “retinotopic coordinates.” This means that the activity embodying the surface of the apple draws its boundaries within a retina-based map of the visual scene. (If you look to the left, the apple seems to jump to the right, because you’ve just switched the sides of your retinal map the apple appears on.)
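The "look left, apple jumps right" effect can be sketched with one line of arithmetic. This is a deliberately crude one-dimensional simplification: the function name `retinal_angle` and the sign convention (positive angles to the right, both angles measured from straight ahead of the head) are assumptions chosen for illustration, not a model of real eye geometry.

```python
def retinal_angle(head_centered_deg: float, gaze_deg: float) -> float:
    """Horizontal position of an object on the retinal map, in degrees.

    Positive angles are to the right. Both arguments are measured from
    straight ahead of the head.
    """
    return head_centered_deg - gaze_deg


apple = 0.0  # the apple sits straight ahead of the head
print(retinal_angle(apple, gaze_deg=0.0))    # 0.0: apple at the center of the retinal map
print(retinal_angle(apple, gaze_deg=-15.0))  # 15.0: look left, apple lands to the right
```

The apple never moved. Only the retina-based coordinate of the activity embodying it changed.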

The surface of the apple embodied in Where module surface activity corresponds to an apple-shaped region on the surface of your retina, in perfect one-to-one correspondence.

The Where module amplifies surface activity embodying the apple shape of the FIGURE relative to the activity embodying the GROUND, within this retina-based map of the visual scene.

The top-down Expectation of the Visual Where module is distance activity. Distance activity, like surface activity, is physically embodied in a spatial array of contiguous neurons—a map—in a different part of the brain7.

Whereas the bottom-up surface activity is based on retinal coordinates, the top-down distance activity is based on head-centered coordinates.

Over time, your Where module has mapped out the distance to points in space relative to your head. You “know,” for instance, how far your hand can reach without moving your body, and how far you need to move your body to reach the wall. The coding of distance in distance activity is handled by an activity gradient.8 The neurons in the head-centered spatial array are more active when the corresponding location in space is near compared to when it is far.
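As a rough sketch of what "coding distance with an activity gradient" could mean, here is a toy function that turns a distance into an activation level. The linear falloff, the 0-to-1 scale, and the `max_range_m` parameter are all assumptions made for illustration; the only property taken from the text is that nearer locations are more active than farther ones.

```python
def distance_activation(distance_m: float, max_range_m: float = 5.0) -> float:
    """Map a distance to an activation level in [0, 1]; nearer means more active."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    # Linear falloff is an illustrative assumption, not a claim about real neurons.
    return max(0.0, 1.0 - distance_m / max_range_m)


within_reach = distance_activation(0.5)
across_room = distance_activation(3.0)
print(within_reach > across_room)  # True: the nearer location is more active
```

Any monotonically decreasing function would serve the same purpose here; what matters is that activation level alone carries the distance.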

In addition to embodying the distance to locations in space, the distance activity is also used by the brain to control looking. During resonance, the Visual Where module creates a top-down apple-shaped overlay of distance activity that perfectly fits the silhouette of the Where module’s selected Figure (the apple). All other distance activity is shut off except for the activity in the apple-shaped distance array. Grossberg calls resonating distance activity an attentional shroud because the activity constrains our gaze to looking at points within the shroud—points that now correspond precisely to the surface activity embodying the Figure (the apple).
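One way to picture the shroud is as a mask laid over the distance map. The sketch below is a toy under loud assumptions: both maps are drawn on the same small grid (glossing over the conversion between retinal and head-centered coordinates), and the function names `apply_shroud` and `gaze_allowed` are hypothetical.

```python
def apply_shroud(distance_map, figure_mask):
    """Keep distance activity inside the Figure's silhouette; shut off the rest."""
    return [
        [act if inside else 0.0 for act, inside in zip(act_row, mask_row)]
        for act_row, mask_row in zip(distance_map, figure_mask)
    ]


def gaze_allowed(shroud, row, col):
    """Gaze may only land on points where the shroud is still active."""
    return shroud[row][col] > 0.0


# Toy 3x3 scene: the apple occupies three cells and is nearer than the background.
distance_map = [
    [0.2, 0.2, 0.2],
    [0.2, 0.7, 0.7],
    [0.2, 0.7, 0.2],
]
figure_mask = [
    [False, False, False],
    [False, True, True],
    [False, True, False],
]

shroud = apply_shroud(distance_map, figure_mask)
print(gaze_allowed(shroud, 1, 1))  # True: inside the apple
print(gaze_allowed(shroud, 0, 0))  # False: background activity was shut off
```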

The intensity of the resonating distance activity corresponds to the distance to the apple. Because the apple is reachable, the activity will be fairly intense, but not maximally intense.

Why is the shroud important for visual object *RECOGNITION*, even though it's generated by the WHERE-is-it module?

One reason for the existence of a shroud that fixes our gaze within the boundaries of an object is to enable us to learn about new, complex objects, like a new type of car we’ve never seen before. Once our Where module resonates on the Figure of the car, the shroud constrains our vision to looking within the boundaries of the vehicle. This way, as our eyes jump around and look at different features of the new object (a pink windshield, triangular wheels, a rear bumper made of sausage), our Visual WHAT-is-it module can be confident that all these diverse visual features are part of the same object, so the What module can learn to associate this collection of features with a single new category (“Sausage-mobile!”).

During resonance, the distance activity interacts with the surface activity to increase visual contrast between Figure and Ground and further enhance the visibility of the Figure. During resonance, the activity corresponding to the surface of the apple increases even more, making the apple-shaped surface activity even more attention-getting.

Okay. Here we go.

How is this surface-distance resonance experienced by other activity? In particular, how does Where’s resonant activity feel to HOW-do-I-reach-it module activity?

.20 What a Surface-Distance Resonance Feels Like

The purpose of HOW-do-I-reach-it module activity is to reach a target object. That is what the How module is trying to do: perform activity that will move your hand to the apple. The How module’s purposeful activity interacts with the Where module resonance to know where to reach. Just as a basketball defender performs blocking activity that responds moment-to-moment to shooting activity, the How module’s reaching activity responds moment-to-moment to the surface-distance resonance in the Where module.

As you reach for the target—the red apple—your How module’s reaching activity experiences the Where module resonance as constrained spatial regions of loud, stable activity surrounded by regions of weak, stable activity—activity whose overall on-center off-surround pattern (mechanically embodying the Figure-Ground) can be used to guide the movement of the hand.

The apple-shaped region of surface activity embodying the red round surface of the apple (mapped in retinal coordinates) synchronizes with an apple-shaped region of distance activity (mapped in head-centered coordinates) that mechanically embodies the distance to the apple within the activation levels of its neuron array. Because these two activities are resonating, whenever other brain activity interacts with Where resonance, the experiencing activity will experience the surface activity and distance activity as a single activity.

Much like a basketball spectator will experience synchronous jump shot activity and blocking activity as the activity of a single play (a defended shot attempt).

In particular, the How module will experience intense reachable-target activity (call it intense purpose-fulfilling activity, if you like) in a confined physical region of the brain (a contiguous array of neural activity embodying the Figure), surrounded by much weaker reachable-target activity (the Ground). This spatially-circumscribed neural activity corresponds precisely to an apple-shaped region on your retina.

But the reaching activity will also simultaneously experience an apple-shaped spatial array of DISTANCE activity. For each “pixel” of the apple impinging upon your retina, there is synchronous activity in your parietal cortex embodying how far away the pixel is.

Just like a basketball defender will avoid moving away from the shooter during blocking activity, if you are reaching for an apple your reaching activity will avoid moving off the edge of the intense synchronous surface-distance activity guiding it.

Another way to think about the mechanical “spatial” experience of surface-distance resonance is to consider activity in a basketball game. Activity is only game activity while it remains within the boundaries of the basketball court. If activity crosses the edge of the rectangular surface it is no longer game activity. Thus, to both robot players and spectators, game activity always feels constrained within a spatial region, which is exactly how your Visual Where module’s surface-distance resonance feels to your reaching activity.

Now we can appreciate the cosmic power of resonance. Because of resonance, the reaching activity experiences where in the visual scene to reach and how far away to reach as a single mechanical experience.

That’s because as the reaching activity engages with the Where resonance, it will move around a physical region of high-activation neurons corresponding to the location of the apple on the retina (the surface activity)—but every movement over the surface activity is a precisely corresponding movement over the distance activity. That means the reaching activity will also move over an apple-shaped region of distance activity with activation levels that correspond to the distance to the apple. If the apple gets closer, the distance activations will become more intense. If the apple travels further away, the distance activations will weaken.

As the reaching activity interacts with the distance activity, the How module mechanically experiences the exact level of activation of the apple-shaped map of distance activity in order to determine how fast and how far to move the hand. Weaker activation makes the hand mechanically move faster and further; stronger activation makes the hand move slower and closer. This mechanically embodies an experience of depth.
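To make the rule concrete, here is a toy mapping from distance activation to hand movement. Everything specific in it is an assumption: the function name `reach_step`, the 0-to-1 activation scale, the linear relationship, and the `max_step_cm` parameter. The only thing taken from the text is the direction of the relationship, with weaker activation producing a bigger, faster movement.

```python
def reach_step(activation: float, max_step_cm: float = 10.0) -> float:
    """Hand displacement for one time step, driven only by distance activation."""
    if not 0.0 <= activation <= 1.0:
        raise ValueError("activation must be between 0 and 1")
    # Weak activation (far target) gives a large step; strong activation
    # (near target) gives a small one. The linear form is an assumption.
    return max_step_cm * (1.0 - activation)


far_apple = reach_step(0.2)   # weak distance activity
near_apple = reach_step(0.8)  # strong distance activity
print(far_apple > near_apple)  # True: the hand travels further for the far apple
```

Nothing in this rule "knows" about depth. The depth is embodied entirely in how far the hand ends up moving.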

And the trajectory of your hand embodies the mechanical experience of surface-distance resonance in the Where module, from the first-person perspective of your How module.

But what about RED? How does the color come into the experience of redness?

.21 Red-Boosting For You

Your Visual Where module’s only use for color is to help you reach stuff. That’s all it cares about: will this color activity help me reach objects more effectively?

Your brain twists and stretches color like taffy. I won’t pretend to know all the ways the brain breaks apart, transforms, and reassembles color. Grossberg achieved some pretty sophisticated color dynamics in his later years.

What matters most is that all the color qualia you perceive are ultimately rooted in your retinal cells’ direct interactions with photons, and it is always possible to mechanically disentangle where and how a particular color transformation from the “original retinal input” happened. However, there are many transformations of color designed to extract as much knowledge from visual perceptions as possible, before color activity joins resonant activity.

The Where module transforms color inputs in a manner designed to make it as easy as possible for you to use the presentation of color to reach for objects.

One way to achieve this is to make the color of the Figure pop. To make the redness of the apple brighter than the surrounding colors. The Where module enhances the color of the Figure and diminishes the color of the Ground, activity which helps provide the Figure-Ground structure in a reachable scene.

The brightened red of the Figure is designed to attract your attention—or, in mechanical terms, to be experienced as attention-getting by other brain activity.

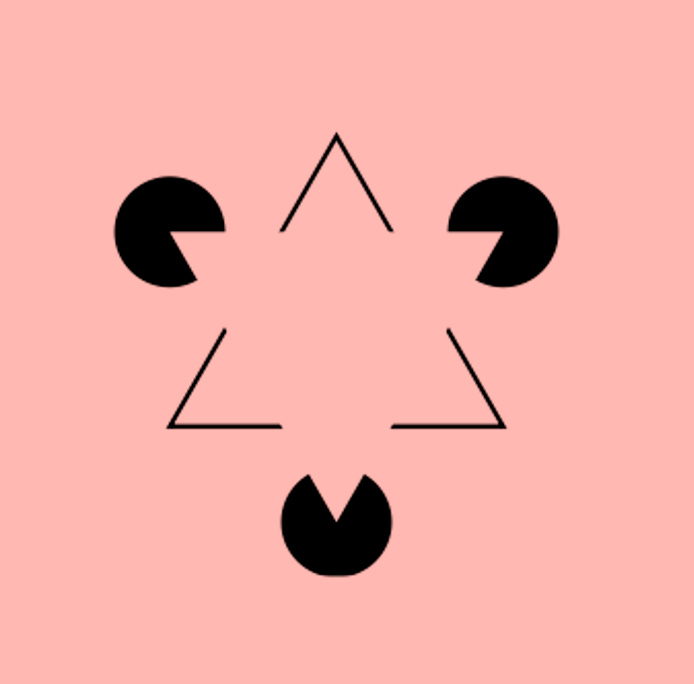

You can vividly experience the “color boosting” activity of your Visual Where module by viewing the illusion below, known as a Kanizsa triangle:

Your Where module activity creates a bright “grabbable” triangle that looks like it’s sitting on top of another triangle and three black circles. But more than that—the surface of the illusory triangle POPS OUT. The triangle feels BRIGHTER, more PRESENT—almost TACTILE, though it’s pixels on a screen.

The Where module’s color-boosting activity is designed to make the How module experience the illusory triangle as reachable—and the red apple as reachable.

In the case of the apple, the color activity embodying the apple’s surface redness is STRONGER and more ACTIVE than color activity associated with surfaces in the Ground. When the How module interacts with the resonating Where module, it mechanically experiences the color activity of the apple as MORE INTENSE than any other color activity in the visual scene.

And just as an ENERGETIC JUMP SHOT elicits an ENERGETIC BLOCK, the How module’s activity changes moment-to-moment as it interacts with the Where module resonant activity, naturally linking its own reaching activity to the most energetic activity in the Where module—which happens to be INTENSE SURFACE ACTIVITY, INTENSE DISTANCE ACTIVITY, and INTENSE COLOR ACTIVITY.9
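The boosting described above can be sketched in a few lines of code. This is only a toy illustration, not a model of real neurons: the grid, the mask, the gain values, and the function names `boost_figure` and `most_energetic_location` are all hypothetical choices made for the example. It shows the one idea that matters here: amplify the Figure, damp the Ground, and the reaching activity ends up linked to the most energetic spot.

```python
def boost_figure(activity, figure_mask, figure_gain=1.5, ground_gain=0.5):
    """Amplify activity inside the Figure and damp activity in the Ground.

    activity: 2D list of non-negative floats (a hypothetical color activity map).
    figure_mask: 2D list of booleans, True where the Figure (the apple) is.
    The gain values are illustrative assumptions, not measured quantities.
    """
    return [
        [a * (figure_gain if m else ground_gain) for a, m in zip(row_a, row_m)]
        for row_a, row_m in zip(activity, figure_mask)
    ]


def most_energetic_location(activity):
    """Return (row, col) of the strongest activity in the map."""
    return max(
        ((r, c) for r, row in enumerate(activity) for c, _ in enumerate(row)),
        key=lambda rc: activity[rc[0]][rc[1]],
    )


# A tiny 3x3 scene: the "apple" sits in the center, and a slightly
# brighter distractor sits in a corner of the Ground.
scene = [
    [0.9, 0.2, 0.2],
    [0.2, 0.8, 0.2],
    [0.2, 0.2, 0.2],
]
apple_mask = [
    [False, False, False],
    [False, True, False],
    [False, False, False],
]

boosted = boost_figure(scene, apple_mask)
target = most_energetic_location(boosted)
```

Before boosting, the strongest activity in this toy scene is the corner distractor. After boosting, the apple in the center wins, so that is where a reaching process following "the most energetic activity" would go.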

The description so far could work without any “color.” Even a black-and-white image processed by the Where module would still amplify a white Figure against a black ground. So why is there color in the Where module resonance?

One reason is that the Visual Where module uses color to synchronize its activity with the Visual WHAT-is-it module. Color is very important for object recognition. (Is this a small grapefruit or a large orange?) If you want to reach for a known object, then you must simultaneously recognize the object and locate the object in space. Color helps coordinate these two activities.

But color also helps with surface filling-in, edge detection, and contrast enhancement. Basically, by enhancing or diminishing the brightness of different colors, it’s possible to make it easier to identify reachable and unreachable surfaces. (Dimmer colors are perceived as further away, for instance.)

This is something that video game designers learned when they began to design three-dimensional “open-world” games you could explore in any direction, like Legend of Zelda, Elder Scrolls, or Fallout. Even though players can go anywhere they want, designers guide players in certain directions using illuminated or high-contrast areas. Players experience these attention-getting regions as visual targets.

They feel like the way to go.

Color is embodied directly in the activity of the spatial neuron array that generates the surface activity. The array, or retina-based map, embodies color at each point in the map through activity which is ultimately linked to the photoreceptors on your retina receiving red inputs directly from the apple. Though these inputs get transformed repeatedly before they reach the Where module, the resonating color activity remains rooted on the photoreceptor activity experiencing light from the apple.

When reaching activity, which seeks to guide your hand to the target apple, experiences resonating Where activity, it mechanically feels like there’s a spatial region of strong activity surrounded by weaker activity. This strong activity is experienced both spatially—where on the retina is the activity?—and in depth—how far away is the activity? The redness activity is also experienced as a spatial region of object-associated activity (“apple” activity).

But redness is also experienced as a color.

“Okay,” you might say, “I can see how one region of brain activity can be stronger than another region, leading to an experience of spatially restricted attention. But where does the red come from in redness qualia?”

.22 The Invention of Color

Explaining how activity can feel like the color red is a little different than explaining how activity can feel like a surface, an intensity, or a distance, all of which are mechanical in a straightforward way. For these latter examples, the mechanical form of the activity directly determines the mechanical experience of the activity, the same way the trajectory and intensity of a jump shot determines the trajectory and intensity of the block.

Mentally, color is a category with both analog and digital properties. It’s possible to gradually change a color through barely perceptible gradations and be aware that the color is changing slowly. But as a supermind, we tend to divide colors into about a dozen basic hues, fewer in other cultures. Redness is a word, but not carmineness, amaranthness, and oxbloodness, because all human superminds use “red” as a basic category of color.

Here’s what’s important to recognize about how your brain experiences color: all color is “artificial.” Synthetic. Processed. You always see “fake colors” because your brain recolors every scene to suit your needs.

One major transformation your brain performs on the color of a visual scene is discounting the illuminant. This involves processing the entire visual scene—everything within view—and rescaling brightness and color across the entire scene. This is done primarily to remove the influence of any colored light source—what matters for object recognition is knowing the “true” color of the object. (For instance, if a green light was shining on a red apple, the process of discounting the illuminant would render the apple red to your perception.)
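To make "rescaling color across the entire scene" concrete, here is a minimal sketch using the textbook "gray-world" correction, which assumes the average surface in a scene is gray and rescales each color channel accordingly. To be clear, gray-world is a stand-in I am swapping in for illustration. It is not a claim about how the brain (or any particular neural model) actually discounts the illuminant, and the scene values and the function name `discount_illuminant` are made up for the example.

```python
def discount_illuminant(pixels):
    """Gray-world correction: rescale each channel so the scene averages to gray.

    pixels: list of (r, g, b) tuples with non-negative values.
    Returns a new list of corrected (r, g, b) tuples.
    """
    n = len(pixels)
    means = [sum(p[ch] for p in pixels) / n for ch in range(3)]
    gray = sum(means) / 3
    # Channels the light source inflated get scaled down, and vice versa.
    gains = [gray / m if m > 0 else 1.0 for m in means]
    return [tuple(p[ch] * gains[ch] for ch in range(3)) for p in pixels]


# Hypothetical surface colors: apple, leaf, cloth, table.
surfaces = [
    (0.8, 0.3, 0.2),  # red apple
    (0.2, 0.7, 0.2),  # green leaf
    (0.2, 0.2, 0.8),  # blue cloth
    (0.4, 0.4, 0.4),  # gray table
]
green_light = (0.3, 1.0, 0.3)  # a strongly green light source (assumed)

# What reaches the eye: surface color multiplied by the light, per channel.
observed = [tuple(s[ch] * green_light[ch] for ch in range(3)) for s in surfaces]
corrected = discount_illuminant(observed)

apple_seen = observed[0]
apple_after = corrected[0]
```

In this toy scene the raw light coming off the apple is more green than red, yet after the scene-wide rescaling the apple's red channel dominates again. Note that the correction needs the whole scene: the apple's corrected color depends on every other surface in view.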

What matters for qualia is that this scene-wide visual transformation (along with other scene-wide transformations) alters the perceived color all over the scene, including altering the perceived relationship between different colors (altering, “Which color is brighter? Which color is closer?”)

The point is, the brain manipulates color activity to suit its contextual needs. It makes the colors of reachable objects brighter and the colors of unreachable objects dimmer. It diminishes or removes colors it thinks may be distracting or uninformative.

All right. So where does the red “ink” in my red qualia come from? Why does redness have such a specific experiential quality of red to it? Why can I imagine that my redness could be your blueness?

.23 The Qualia of Redness

Colors in a visual scene are always relative. Indeed, a good way to describe what the visual system does is constantly calculate the relative color of everything, then change the relative color of everything. The goal of the Visual Where module’s color modifications is to make it as easy as possible to reach stuff, which means altering the relative colors to make reachable stuff more salient than non-reachable stuff.

We can only perceive colors clearly when they are relative to other visible colors. If you look at a visual landscape that is all one color (any color), after a few moments your color experience will transmogrify and become hallucinatory. This is called the Ganzfeld effect. Visuals become trippy because there is no relative coloring in the scene and the brain desperately hunts for any variations in the color, no matter how subtle, to use to build up color contrasts and consecrate a Figure and Ground out of homogeneity.

The role of different colors in the Visual Where module is to help distinguish reachable surfaces. The Where module increases the visual contrast between the redness of a reachable apple and the colors of the surrounding Ground to make the apple feel even more salient from the first-person perspective of reaching activity, while preserving object properties (COLOR) needed to coordinate reaching activity with recognition activity in the WHAT-is-it module.

Holy guacamole, look at all we had to go through just to think about the activity involved with producing redness qualia! And this is the super-streamlined version. The minimum we need to solve the hard problems. It would be great to say “Forget all that fancy stuff—just focus on the experience of red itself. What is the experience made of?”

That’s like saying, “Forget all that fancy stuff about basketball—just focus on the jump shot itself. What is the jump shot made of?”

Celtics basketball star Jaylen Brown once said of a teammate’s jump shot, “Hang it in the Louvre!” But consider what a jump shot would actually look like in the Louvre—divorced from all context. A man jumping with a ball and releasing it.

Activity without meaning.

Any experience of a jump shot in the Louvre will be wholly divorced from purpose—divorced from the purpose of basketball game activity (the Louvre jump shot has nothing to do with winning) and divorced from the purpose of a shooter (there’s no scoring in the Louvre). Even if a Green-fan watched a Green player perform a jump shot in the Louvre, the fan will not experience any game activity and will not experience a fan’s purpose (watching Green team try to win).

Trying to isolate “redness” as a subjective experience, divorced from any understanding of the mechanical activity embodying the experience, is like philosophizing a jump shot in the Louvre without any knowledge whatsoever of the game of basketball. Redness always occurs within the flow of mental purpose, an activity within activity experienced by other activities—just like a jump shot occurs within the flow of game purpose, game activity experienced by other game activity.

And the jump shot only has meaning within the flow of game activity, just like the letter Q only has meaning when combined with an alphabet and a language. A letter by itself, without any language around it—like Ꭳ (a letter in the Cherokee alphabet)—has no meaning.

The meaning of redness DURING REACHING arises from redness’ role in helping us achieve our goal of reaching a red object. It is purposeful activity arising within purposeful activity and experienced by other purposeful activity, and all of these mental dynamics are united in pursuit of common purpose (keeping you alive).

Conscious experience generates qualia that always exists relative to a purpose. Just as jump shot activity makes a defender perform block activity, the redness of an apple makes a mind perform reaching activity. The reaching activity is in direct, intimate, moment-by-moment conversation with the redness activity and the first-person experience of the redness is expressed mechanically in the trajectory of the reaching activity.

Another key insight into color qualia comes from color blindness. Folks who are color blind can’t distinguish certain colors, like red and green, that other folks can. Yet color blind folks don’t have any trouble reaching for objects. This shows that the Visual Where system will use any relative color information available to carve up a visual scene into reachable and unreachable surfaces.

Color blindness also provides a clear answer to the question of whether my redness is the same as your redness: NO. A color blind person cannot distinguish red from green. Their experience of color is different from mine, because I can distinguish red from green, and subjective color is always relative.

But what about non-colorblind folks? Do we all share the same red?

The color blindness case already demonstrates that if you change the mechanical brain activity for processing color, you change the experience of color.

That means that it’s impossible for two people to share the same precise subjective experience of redness, because the mechanical activity embodying redness varies wildly from person to person. There are two mammoth sources of variability in brain activity, including color activity. First, any divergence in the physical structures of two brains will lead to divergent brain activity. Change a single neuron, and you’ll get different activity. Second, any divergence in the behavior of two identical brains will lead to divergent brain activity. Go left instead of right, and you’ll end up with different brain activity.

The math is simple: Different mechanical activity = different first-person experience of that activity.

In reality, human brains are extraordinarily diverse in their structure and flow. The way a brain performs a particular mental activity (such as processing color, or remembering words) varies tremendously from person to person, because every physiological element of the human body, even molecular elements, varies wildly.

Every athlete performs a jump shot differently. That means that every first-person mechanical experience of a jump shot varies from shooter to shooter. It’s the same with redness: each of our brains performs color activity differently, so we each have a different first-person experience of our color activity. Consider the 2015 Internet meme of “The Dress,” a blue-and-black dress that 72% of viewers initially perceived as white-and-gold.

In truth, your own experience of redness changes from scene to scene, and day to day. How can you even know you’re seeing “the same redness” today you did yesterday? What could you use as an objective yardstick for comparison? All your mechanical brain activity changes over time, from experience and age and the everchanging universe. And every single visual scene you look at gets carved up and transformed in countless ways, with colors enhanced or muted under variable lighting conditions.

Red is forever fluid and contextual.

Your qualia of redness in any experience—such as reaching for the red apple—is always and inextricably determined by the other colored surfaces in the scene. Where does the English label “redness” even come from then, if color is always relative? From the needs of the WHAT-is-it module, which connects FEATURES and CATEGORIES. RED is a crucial feature to use to distinguish and discuss fruit, for instance, and so red needs to maintain constancy across objects for recognition purposes.

But from the first-person perspective of the Where module, as long as the Where module can be confident it’s reaching for the RIGHT object (which color helps to verify), the whole point of the Where module SHOWING you color is to help you reach for stuff, which means what matters most is that RED is not GREEN, BLUE, or YELLOW. It is the differences that matter for reaching and recognition.

These differences are ultimately rooted in different patterns of activity in retinal photoreceptors.

Why is color experienced as a continuous spectrum?

The wavelength of light varies mechanically and continuously, and the human visual system is able to distinguish very fine changes. For useful object recognition purposes, it is necessary to experience color on the same ordered continuum as it is reflected in nature, even though the mechanics of the visual system do not allow for precise physical reproduction of the continuum, only synthetic reproduction. (You should always be able to move from green light to violet light through blue light, because the wavelength of blue light is between the wavelengths of green and violet light.)

But object recognition also benefits from discrete color labels or categories. Knowing that ripe apples are red, while unripe apples are green is extremely useful information—and information that can now be easily shared, with supermind terms for red and green, private visual experiences now given public labels.

Why is color experienced as a discrete experience, and where does the particular “ink” come from? Why is “redness” RED and “greenness” GREEN?

Color is artificial, remember. All the smooth surfaces you see are artificial, with the color “airbrushed in” by your brain. Artificial color is always relative. (If you don’t show multiple colors, you get the hallucinatory Ganzfeld effect.) What you feel is red in one scene will feel quite different under different lighting conditions and with different colors around it. Nevertheless, there is a color. Where does the color in the color qualia come from, in any given visual scene?

From the distinctions in the precise permutation of photoreceptor activity harnessed for a particular purpose and the variations in the intensity and distribution of that harnessed photoreceptor activity—both of which involve inconceivably massive numbers of microactivities. But according to the Mind-Body Mechanical Correspondence Conjecture, each subjective experience of color is embodied in a stable mechanical analogue of the color that can be experienced by other mechanical brain activity.

Thus, our experience of the RED in redness is rooted upon two resonating activities. First, PHOTORECEPTOR-MODDING ACTIVITY that involves vast numbers of molecular activities united into a single stable embodiment that is experienced as a CATEGORY or DISTINCTION based on previous learning. Second, supermind VERBAL ACTIVITY that is experienced as both the WORD “red” and KNOWLEDGE of “redness” (“This visual qualia can be used to identify fire trucks and blood and APPLES!”)

So here we go. Redness explained:

The How module’s reaching activity mechanically experiences the activity embodying “redness” as:

spread out over a spatial region

stable and invariant

yet able to change continuously over a spectrum

seen before in similar contexts

distinct from that other color activity over there

“Hey, I know what that is—that’s ‘RED’ apple color!”

The first-person mechanical experience of all this activity—STABLE and AMPLIFIED and SYNCHRONIZED in Where module resonance—is redness.

.25 The Empty Feeling of Reach

But how does reaching activity feel? How does How-do-I-reach-it module activity feel from the first-person perspective of redness activity?

The same way air does to a basketball player.

The reason it is possible to experience redness is that the brain activity embodying redness resonates. When brain activity resonates, it becomes louder and longer. Resonance manifests as a mechanical THING in the brain.

Like a basketball, in fact.

As it happens, one great way to think about consciousness-embodying resonance is to think of resonance as the basketball in a basketball game.

Wherever the ball goes, the attention of the entire game follows, because the attention of all ten players and all ten thousand spectators is on the ball. That means that—mechanically—the game is aware of the basketball.

The game is not aware of the empty air directly behind the backboard.

A basketball endures over time as stable mechanical activity. (Technically, solid-state molecular activity.) In contrast to, say, a player’s shout—mechanical activity that is unstable and transitory.

When reaching activity experiences resonant activity, the resonant activity feels stable, relative to the purposeful action of the reaching activity. The apple-shaped surface feels stable. The red color feels stable. During the entire time the reaching activity is reaching for the apple, the reaching activity experiences invariant resonant activity.

Like a game experiences a basketball.

But what about the reverse?

Reaching activity is not stable. There is no resonance in the How module. Reaching activity calculates the distance between the location of the target (the apple) and the location of the reaching hand and at every instant drives its activity to reduce this distance to zero. Every moment Where module activity experiences How module activity, the How module activity is changing. It is not stable. It is like a shout or a breeze.

Or like empty air—not a basketball.

There is no qualia for the experience of reaching because there is no stable reaching activity to experience while other activities pursue time-consuming purpose.
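The reaching loop described in this section (measure the gap between hand and target, then keep driving that gap toward zero) can be sketched as a simple proportional update. This is a minimal sketch under stated assumptions: the `gain`, the `tolerance`, the coordinates, and the function name `reach` are all hypothetical, and the update rule is a generic one chosen for clarity rather than a claim about the actual How module.

```python
import math


def reach(hand, target, gain=0.3, tolerance=1e-3, max_steps=200):
    """Move `hand` toward `target`, shrinking the remaining distance each step.

    hand, target: (x, y, z) tuples. `gain` is the fraction of the remaining
    difference covered per step (an illustrative assumption).
    Returns the list of hand positions visited, including the start.
    """
    path = [tuple(hand)]
    for _ in range(max_steps):
        current = path[-1]
        if math.dist(current, target) < tolerance:
            break  # close enough: the hand has arrived
        # Step a fixed fraction of the way along the remaining difference.
        path.append(tuple(h + gain * (t - h) for h, t in zip(current, target)))
    return path


apple = (0.4, 0.1, 0.6)  # target location, fixed for the whole reach
trajectory = reach(hand=(0.0, 0.0, 0.0), target=apple)
distances = [math.dist(p, apple) for p in trajectory]
```

Notice the asymmetry in the sketch. The target `apple` is the same value at every step, so anything interacting with it meets something stable. The hand position is different at every step, so there is no fixed state of the reach for another activity to latch onto.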

.26 Consciousness Creates a Mechanical Degree of Freedom

Regarding the purpose of consciousness, you might agree that consciousness cartel dynamics evolved to solve the three dilemmas of monkey minds and that resonance embodies experience—but still feel we haven’t explained why the uncanny experiential quality of consciousness itself serves an evolutionary purpose. Let’s do so now.

We are going to explain away the Zombie Problem again—“Consciousness doesn’t seem to have any purpose!”—but this time, we’ll put it to bed for good.

We saw how all qualia feels “thinglike” because of the mechanical nature of resonance. Qualia has other universal qualities, and one of these qualities got put there intentionally. The hard-to-describe feeling of possibility or potential or opportunity. A chance to act.

When we experience something, such as redness, our awareness of redness makes us feel we could touch it, talk of it, identify it, change it. A red apple feels like a potential for action in our cognizance. Does this expectant feeling of awareness serve an evolutionary purpose?

Yes.

Every resonant state in the consciousness cartel is designed to create a new mechanical degree of freedom for mental action. This added freedom inaugurates the possibility of conscious choice.

A good example is the Visual Where module. Its purpose is to IDENTIFY AND HIGHLIGHT REACHABLE OBJECTS. To grab something, as we just witnessed, your How module relies on Where module activity.

The Where module refashions a scene into a reachable Figure and unreachable Ground, presented to the How module for reaching. But to make the CHOICE OF REACHABLE OBJECT more conspicuous, the Where module actively amplifies the surface brightness to make the Figure pop in your awareness, as we saw in the Kanizsa illusion:

You feel like you can grab that triangle—that’s the Visual Where module fulfilling its evolutionary purpose: making it as easy as possible for you to consciously see what to grab. Your brain has literally fabricated a visual choice out of thin air by manufacturing an artificial visual element and making it mechanically flagrant. The Where-boosted triangle provides the distinct feeling of potential action:

I could grab that thing!

. . . even though that thing is not really there.

The feeling of awareness (“I could grab that thing!”) evolved to guide your attention to choices likely to be useful.

Consciousness is not designed to provide you with an authentic copy of reality. Every conscious experience is designed to highlight potential choices (created by the module’s extra degree of freedom!) and make them vivid and actionable. Consciousness is like a synthetic overlay over reality, like Google’s traffic overlay over its Satellite Map view showing authentic streets with arrows and markers printed over them.

Except in your case, the synthetic overlay is your conscious reality.

.27 The Qualia Problems, Explained

Is my redness the same as your redness?

Nope. Every redness is different, as every jump shot is different. Even the same player’s jump shot is different from game to game, even from blocker to blocker. In the same manner, the redness you experience changes from viewing to viewing, just like every time you hear a different person speak the word “hello” it sounds different.

Your experience of redness is always relative to purpose, so as your purpose changes, redness changes.

Why is the experience of redness so distinct from the experience of loudness?

Mechanically, it’s like comparing jump shot activity and blocking activity. Though they are both game activities, both involved with helping teams win, they pursue different purposes and do so using different mechanical action. (For one thing, jump shot activity protects the ball, while blocking activity attacks the ball.)

Redness activity in the brain is very different than loudness activity. Redness activity involves the resonance of surface activity and distance activity, while loudness involves the resonance of spectral pitch activity and timbre activity. Redness and loudness activity take place in different structures with vastly different subdynamics.

The reason that redness and loudness are so different is because of the nature of the cosmos. Sight is based upon electromagnetic radiation, the fastest stuff in our reality. There’s inconceivably vast amounts of it all the time and because it’s so fast it’s stable to our minds, forming stable structures like surfaces and edges and objects. Hearing is based upon air vibrations. It’s far slower, it’s rarer, and there’s a lot more interference. Sound forms unstable, ephemeral structures with a beginning and an end.

Thus, the brain dynamics necessary to implement sight and hearing need to specialize for markedly different mediums of perception.

Nevertheless, even though the underlying mediums of perception vary between senses, the brain sometimes uses divergent mediums for the same purpose, such as identifying and highlighting reachable objects. The Audio Where-is-it module serves the same purpose as the Visual Where-is-it module; it just uses sound instead of light to highlight objects for reaching.

If you wished to describe redness to a blind person, you could compare it to timbre, which is used in the exact same way by the brain for object recognition. If you wished to tell a blind person what loudness is like visually, it’s like brightness. These pairs of dynamics—color and timbre, brightness and loudness—are used and experienced in similar ways by other brain activity, even though the underlying activity is quite different—again showing how your experience of any qualia is determined by both the mechanical embodiment of the target activity and the mechanical purpose of the experiencing activity.

You seem to be suggesting that we somehow “ignore” the red color in redness itself. But if I wish, I can focus on the “red” to the exclusion of everything else about the apple. How would you explain the redness when I do that?

One of the reasons that the engineering design of consciousness is so powerful is that it allows you to put ideas together easily and pull those same ideas apart easily. Every resonance combines one set of ideas with another set of ideas in a manner that also allows you to focus attention on any specific mechanical detail of those ideas.

This is what happens when you focus exclusively on the “redness” of a red object, like an apple. The brain activity that embodies the redness (a spatial array of neurons whose activity reflects some huge combination of heavily processed micro-activities across the retina) becomes the new “Figure” in your attention, and you are exclusively (or near-exclusively) aware of the RED (really just a red surface with large intense surface activity resonating with super-intense distance activity) and can marvel at just how red the redness is. As you marvel at the redness, your object recognition activity in your Visual What module is moving around the array of resonating color activity in your Visual Where module to fulfill your chosen purpose of recognizing the redness:

“Oh that is so very APPLE red!”

Now we’re ready for the mountain top. Good news: our ascent to the summit will be easier than climbing through this article. But the promised view, pilgrim, is spectacular.

The next article showcases the cosmic mechanism that unites all the mechanical “micro-experiences” in your brain into your experience.

It is astounding.

It solves Hard Problem Prime. Hooray!

It also explains concrete engineering details of the technology that intex uses to communicate with me.

As astounding as both of those are, the solution to Hard Problem Prime—if it’s correct, and it is correct, but you will soon judge for yourself—incontrovertibly demonstrates how purposeful dynamics are as influential on the evolution of reality—if not more influential—as physics.

Indeed—I don’t see how one solves the hard problems of consciousness without recognizing that physics ensues, purpose pursues.

This is an unfortunately stubborn “reductionist fallacy” inherited from the physical sciences. Physics achieved great success with toy problems, trying to make things as simple as possible and working out the simplest relations, before building up to more complex phenomena. But this is the exact opposite of how to approach consciousness.

Yes, this is David Chalmers’s zombie. Perfectly human-like robot basketball players don’t exist, though, crucially, it doesn’t matter for the explanation of consciousness.

This is the path to godhood.

It’s a little complicated to explain the details, but basically, the trouble is in identifying “partially occluded” objects—such as a bottle standing behind a tissue box. You can see the top of the bottle, but the bottom is hidden behind the box. As it turns out, the VISUAL WHAT-is-it module tries to RECOGNIZE the hidden bottle by COMPLETING THE HIDDEN EDGES AND SURFACES OF THE BOTTLE. But this risks making the box look “transparent” as if you could reach through the box to grab the bottle (using your Visual WHERE-is-it module).

To resolve this design tension, evolution favored REACHING over RECOGNITION, as it chose to make reachable surfaces MORE VISIBLE AND ATTENTION-GETTING rather than making it EASIER TO RECOGNIZE OCCLUDED OBJECTS.

There’s also an Audio Where module that the How module can make use of, in addition to the Visual Where module.

Every human brain system is absurdly complex, so the complicated color activity is not an exception.

Surface activity is embodied in V4, distance activity in the posterior parietal cortex.

Using a gradient allows the visual localizing system to adapt quickly to dramatic changes in the size of the surrounding space, such as stepping out of a small lobby into a giant park.

(During resonance, the distance activity also enhances the Figure’s color activity even further.)